Late last year when I discovered the new TSQL Enhancement introduced in SQL Server 2012, particularly the new Paging Function, I thought to myself that this would be very useful in a number of scenarios. Before I go any further, let’s have a look at what the Paging Function is.

Update: For simplicity, the following scenario uses [Production].[TransactionHistoryArchive] from AdventureWorks2012 and assumes that TransactionID is always continous and incremental as well as starting with TransactionID of 1. (I will discuss more on a scenario where the TransactionID is not continuous in a different post). Special thanks to Shy Engelberg for highlighting the behaviour differences in all three methods discussed in this post. Please read Shy’s comment at the end of this post for further clarification.

The Paging Function is part of the SELECT syntax, as an extension to the ORDER BY clause. Below is an example of its usage to retrieve a data set with TransactionID from 5001 and for the next 100 rows.

-- Paging Function -- Retrieve 100 rows starting from TransactionID of 5001 SELECT [TransactionID] ,[ProductID] ,[ReferenceOrderID] ,[ReferenceOrderLineID] ,[TransactionDate] ,[TransactionType] ,[Quantity] ,[ActualCost] ,[ModifiedDate] FROM [Production].[TransactionHistoryArchive] ORDER BY [TransactionID] OFFSET 5001 ROWS FETCH NEXT 100 ROWS ONLY

This function is very intuitive and would be applicable for returning data set a “page” (batch of rows) at a time. The OFFSET value can be expressed as an integer variable and so can the FETCH NEXT value, which allows easy and configurable data set paging for web/reporting applications.

A few things to note about the Paging Function:

- The ORDER BY column(s) doesn’t have to be consecutive, meaning that we can avoid creating a surrogate consecutive integer key for the purpose of paging. This helps in a typical query to retrieve a page of “active” Transaction records whereby some rows in the table may be deleted or “deactivated”, rendering broken IDs.

- OFFSET and FETCH can only be used in the last query that participates in UNION, EXCEPT or INTERSECT operation.

- If the column specified in the ORDER BY column(s) is not unique, the order of the output is not always consistent.

Alternative: TOP … Batching

In an earlier version, you could write a query to return the same data set using the TOP keyword. Please note that the SET ROWCOUNT clause to limit the number of rows returned in a SELECT query, will not be supported in the next version of SQL Server – and the TOP keyword should be used instead.

-- TOP... Batching -- Retrieve 100 rows starting from TransactionID of 5001 SELECT TOP 100 [TransactionID] ,[ProductID] ,[ReferenceOrderID] ,[ReferenceOrderLineID] ,[TransactionDate] ,[TransactionType] ,[Quantity] ,[ActualCost] ,[ModifiedDate] FROM [Production].[TransactionHistoryArchive] WHERE [TransactionID] >= 5001 ORDER BY [TransactionID]

Alternative: Direct ID Batching

An alternative to the above in an earlier version to SQL Server 2012 is shown below, The result will be the same, assuming TransactionID values are always consecutive.

-- Direct ID Batching -- Retrieve 100 rows starting from TransactionID of 5001 SELECT [TransactionID] ,[ProductID] ,[ReferenceOrderID] ,[ReferenceOrderLineID] ,[TransactionDate] ,[TransactionType] ,[Quantity] ,[ActualCost] ,[ModifiedDate] FROM [Production].[TransactionHistoryArchive] WHERE [TransactionID] BETWEEN 5001 AND 5100 ORDER BY [TransactionID]

By now, you may realise that the Direct ID batching method may need a surrogate consecutive key to ensure the batch/paging size is consistent, with the exception of the last page.

Execution Plan Comparison

Let’s inspect the execution plan of all three methods.

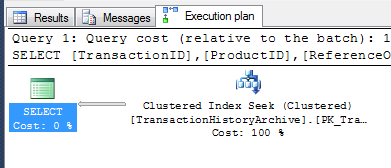

1. Direct ID Batching

As expected, here SQL Server is using Clustered Index Seek as per the index filter on the ID between the 2 values (5001 and 5100). Note that the Actual Number of Rows on the Execution Plan Details of the Clustered Index Seek is 100.

Clustered Index Seek on Production.TransactionHistory

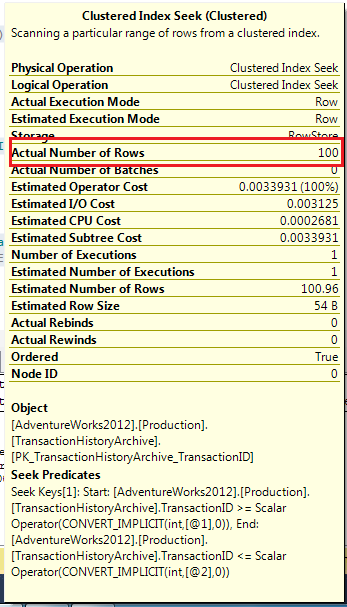

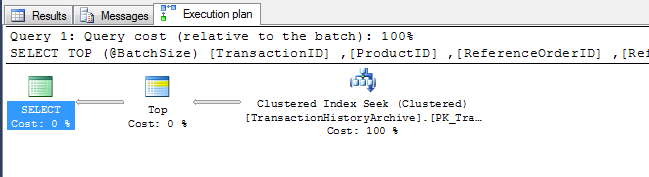

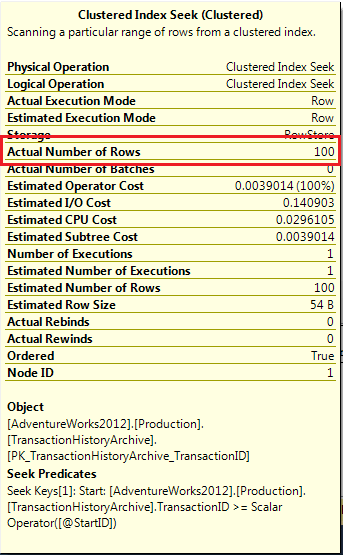

2. Top Batching

Similar to the Direct ID Batching, SQL Server is also using Clustered Index Seek. It then uses TOP as the next step. Note that here similar to Direct ID Batching, the Clustered Index Seek is also returning Actual Number of Rows of 100.

Clustered Index Seek on Production.TransactionHistory

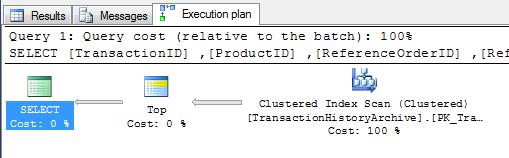

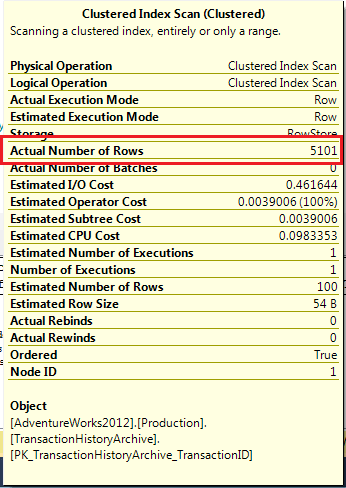

3. Paging Function

Surprisingly, SQL Server is using Clustered Index Scan and the Actual Number of Rows returned here is 5101!

Clustered Index Seek on Production.TransactionHistory

Performance Comparison

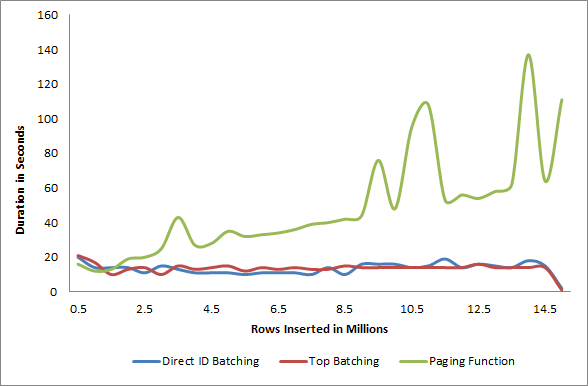

Using Paging Fuction on a source table with a large number of records may not be ideal. As you can already guess, the larger the offset size, the larger the Actual Number of Rows is returned too. Consequently, the Paging Function will take longer and longer as the paging progresses. To demonstrate this, I have a table with over 14.5 Million rows where I iterate through the records in a batch size of 500,000. Each iteration inserts the batched records into a heap table that is empty to start with.

Below is the chart exhibiting the time taken to insert the records in batches using Direct ID batching vs Top batching vs Paging function methods. To start with, the three techniques take about the same amount of time. After inserting about 1.5 Million rows the Paging Function query takes significantly longer than the other two.

Wrap Up

Although SQL Server 2012 new Paging function is intuitive for the purpose of paging a data set, it comes at a cost for large volume of data. The Top Batching and Direct ID Batching perform significantly better under similar circumstances, i.e. simple Source Data with continuous ID.

The above discussion stems from my recent research for the 24 Hours of PASS Spring 2012 session “High Volume Data Processing Without Driving Your DBAs Crazy!” which will be held on 21 March 2012 at 16:00 AEST. 24 Hours of PASS is free and contains 24 back-to-back great SQL Server sessions. You can find many different topics of SQL Server, from DBA, Database development, BI to Performance sessions. So, register now!

Disclaimer

Please note that every environment is different and that these tests are conducted on my small laptop and should not be used as an indication on how the above batching methods will perform in your Production environment. Please test and confirm that you understand these techniques before applying to your Production environment.

Last updated on 25 March 2012 to add further explanation on the scenario used in this post.

22 Responses

Hi Julie,

The performance results are surprise for me. I believed that SQL has done paging in a better way for consuming less resources. I’ve already been changing paging functions as I illustrated at http://www.kodyaz.com/sql-server-2012/sql-paging-in-sql-server-2012-sql-order-by-offset-fetch-next-rows.aspx

But what make sense here in your examples is that, in Direct Id batching input parameters lower bound and upper bound values are directly stıred in the index

But for other two methods, SQL engine knows the lower bound and should read following 100 records.

So what is especially interesting with these results is the difference between Top Paging and Paging Function

Hi Eralper,

Thanks for sharing the info.

Yes, I am surprised myself that the Execution Plan for the Paging Function is using Clustered Index Scan unlike the Direct ID and Top batching technique which are using Clustered Index Seek.

Julie

Perhaps it will be good to report this on Microsoft Connect at SQL sections

Great article!

Another option for paging is the OVER () function as described here:

http://msdn.microsoft.com/en-us/library/ms186734.aspx

Example:

USE AdventureWorks2008R2;

GO

WITH OrderedOrders AS

(

SELECT SalesOrderID, OrderDate,

ROW_NUMBER() OVER (ORDER BY OrderDate) AS ‘RowNumber’

FROM Sales.SalesOrderHeader

)

SELECT *

FROM OrderedOrders

WHERE RowNumber BETWEEN 50 AND 60;

This is being used in many places right now and renders even worse performance because the entire resultset is being returned by the CTE before the “paging” occurs.

Michelle.

Hi Michelle,

Thanks for the feedback. You’re right. CTE is being used very often in a scenario that you have mentioned and the behaviour is just as you have described.

It’s always good to know different techniques and the implications behind using them.

Julie

As a matter of fact, using CTE with OVER() is the only reliable way to do paging prior to SQL Server 2012, because identity columns are not guaranteed to be sequential. often rows are deleted and leave holes in the sequence, so that using just TOP or BETWEEN is naive.

Apologies for the delayed reply.

I agree that using TOP or BETWEEN for paging is naive (or not reliable).

Julie

I am curious why you think CTE has worse performance. I just tried comparing 2 simple queries on a table with 100 million records ordered by its clustered index, and the CTE performed several magnitudes better.

Hi Payal,

Thank you for investigating Michelle’s suggestion further. If you have the results of the execution plan that you could share with us, that would be great.

Kind regards,

Julie

[…] https://www.mssqlgirl.com/paging-function-performance-in-sql-server-2012.html […]

Hi,

I disagree the conclkusion and the comparison you did here in the post for the following reasons:

The alternatives TOP and DirectID return rows based on the value of the TrasactionID (They use it as a predicate in a WHERE clause). they will return diffrenet results than the OFFSET alternative in case the values of TransactionID are not starting from 1 or has gaps in them.

You can not compare two execution plans that might return different results, if you would use a WHERE clause in the OFFSET alternative as well, you would get an index seek and 100 affected rows as well.

the comparison is between two different operations that might return two different resultset.

Shy.

Hi Shy,

Thank you for the fantastic feedback.

In this post, I’m establishing a scenario where the TransactionID is continuous and the TransactionID starts with 1. You are absolutely right that the Paging function (OFFSET .. FETCH NEXT ..) will not return the same values as the DirectID batching method when there are gaps and when TransactionID does not start with 1. Using the TOP function, one could achieve similar result as the Paging function, but not always with ease. I think Paging function is more intuitive but have to be used with caution when retrieving data from a large number of rows due to the performance associated with it.

I will update the post shortly to clarify the scenario further.

Thank you again for the feedback. I believe it helps the readers understanding more about the Paging function as well as being more critical in understanding the scenario before using any of the methods discussed in the post.

Kind regards,

Julie

Julie – I’m a very casual user of SQL, and have been using the 2012 beta with paging support to simply populate a grid one page at a time.

While this works by using the OFFSET/FETCH NEXT, I feel that their implementation is lacking because they offer no way to retrieve the total number of records. I have a complex query, and find that there is no nice way to retrieve the count other than performing the same query twice or making a temp table.

There was a post on the microsoft connect site that better describes this: https://connect.microsoft.com/SQLServer/feedback/details/622246/sql-denali-add-total-rows-counter-to-select-statement

The statements by microsoft didn’t make sense to me, and I was wondering if you had any thoughts on this. It seems to me this is a common issue, and that this information should be readily available, but there seems to be no straightforward way to get it. Maybe I’m missing somthing? If you have any ideas, I’d appreciate any help, and thanks for posting.

Dennis

Hi Dennis,

Thanks for sending me a link to this issue.

From my experience as well as stated by Microsoft on the Connect site, I’m familiar with only one way to do this without issueing another query separate from the main paging query.

Using COUNT(*) OVER() with OFFSET/FETCH NEXT:

USE AdventureWorksDWDenali

GO

SELECT [EmployeeKey], [FirstName], [LastName], COUNT(*) OVER () TotalRowsInTable

FROM [dbo].[DimEmployee]

ORDER BY [EmployeeKey]

OFFSET 30 ROWS FETCH NEXT 10 ROWS ONLY

This statement when run on AdventureWorksDWDenali which has 296 rows in dbo.DimEmployee, will return:

EmployeeKey FirstName LastName TotalRowsInTable

———– ———- ——————– ———–

31 Kendall Keil 296

32 Paula Barreto de Mattos 296

33 Alejandro McGuel 296

34 Garrett Young 296

35 Jian Shuo Wang 296

36 Susan Eaton 296

37 Vamsi Kuppa 296

38 Alice Ciccu 296

39 Simon Rapier 296

40 Jinghao Liu 296

When performing batching in a loop, I usually find that I need to find the end point, either by

1. Knowing the total rows and keeping a counter in the loop to know that I’ve reached it. This is assuming that the source data that we’re iterating through doesn’t change or locked from changing (a common conundrum when issuing iteration/paging over data). In most cases, I perform a separate query to find the count of rows.

2. Processing until there is no more rows. You can issue @@ROWCOUNT to retrieve the number of rows processed in the last statement; if @@ROWCOUNT returns 0 then exit the loop.

Hopefully the above sample helps.

You’ve inspired me to write an article on this. So please stay tuned and let me know if there are any other questions that I may be able to help you with. I’ll include them in my next post.

Thanks,

Julie

This tortured example has little bearing on real-world paged queries.

In real-world systems that support paged data user interfaces, the records can be filtered, ordered, or joined in ways that make your entire argument moot, and even monotonically-increasing ID numbers are not guaranteed to be without gaps.

Microsoft admits this is currently a convenience feature, not really for performance, but if you’re going to do performance tests, at least make it an apples-to-apples comparison.

I agree.

The comparisons are not only meaningless, but also misleading. Is like comparing fuel efficiency of two cars coasting 20 miles downhill, without any obstacles, stops, etc., with another car climbing the same path.

Thirded.

The example is kept simple to prove a point. It is not meant to solve real world problems.

i dont find any positive results from performance perspective. i have executed the above mentioend TOP and PAGING function query and found huge difference in statistics

SEE

–TOP query

(100 row(s) affected)

Table ‘TransactionHistoryArchive’. Scan count 1, logical reads 4, physical reads 0, read-ahead reads 0, lob logical reads 0, lob physical reads 0, lob read-ahead reads 0.

–PAGING query

(100 row(s) affected)

Table ‘TransactionHistoryArchive’. Scan count 1, logical reads 41, physical reads 0, read-ahead reads 0, lob logical reads 0, lob physical reads 0, lob read-ahead reads 0.

That’s correct bhuvnesh. Paging query is not performing well against TOP query.

Thank you for providing more statistics comparing the two techniques.

Julie

Thanks Julie, you´ve helped me to choose better when to use one or another technique. About the performance comparision, at this time you´ve explained very well your scenario and using our brains we can understand the diferences between apples and whatever.

Thanks Julie, you´ve helped me to choose better when to use one or another technique. About the performance comparision