On Feb 11, 2017, I had an amazing opportunity to talk about Azure Data Lake (ADL) at SQL Saturday Melbourne, in front of over 50 attendees. The room was full, with lots of energy, questions and interactions. In case you missed it, you can download my slide deck here.

I’m super delighted to share some of the questions the audience asked. I have also been able to follow up and confirm the answers with the awesome ADL team; massive thanks to Michael Rys (l | t) and Saveen Reddy (b | t).

Q&A

- Can I put a spending (monetary) limit on my ADL job?

I recommend using the local ADL environment for smaller-scale dev/testing. Once you are confident, you can move the relevant pieces to ADL and use the portal to estimate the spend.

- What if one of my devs accidentally runs a large batch of jobs on ADL? I want to make sure they don’t overspend.

Here are a couple of options:

- If you’re using an MSDN Benefit subscription, there is already a default spending limit (read more about it here).
- For Pay-As-You-Go or EA (as outlined here), you can set up billing alerts. Currently there is no monetary capping feature, but you can vote for one.

- Would limiting resources minimize cost?

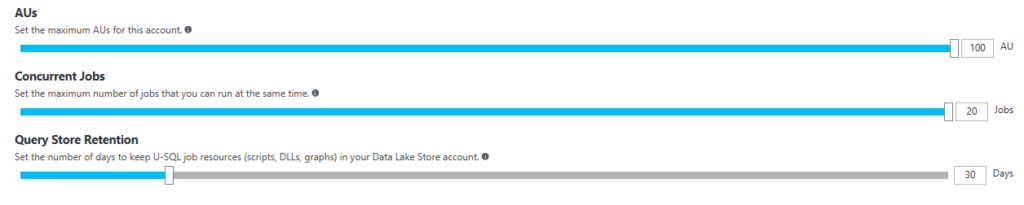

It depends. Under-allocating Analytics Units (AUs) may end up costing more. Please refer to Understanding ADL Analytics Unit (AU) for more information. The current default resource limits for Data Lake Analytics are also available here. To change the AU limit and the number of concurrent jobs from the Azure Portal, go to your ADL account > Properties. Also note that limiting resources may affect performance.
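As a rough illustration of why the answer is "it depends", here is a minimal Python sketch. It assumes a job is billed for the AUs allocated to it multiplied by its running time (AU-hours); the rate, the durations and the `estimated_cost` helper are all hypothetical, not real Azure prices or measured runtimes.

```python
def estimated_cost(aus, hours, rate_per_au_hour):
    """Assumed billing model: allocated AUs x running hours x price per AU-hour."""
    return aus * hours * rate_per_au_hour

RATE = 2.0  # hypothetical price per AU-hour, NOT a real Azure rate

# Hypothetical job that parallelises well up to 10 AUs and no further.
scenarios = {
    "10 AUs, 1 hour": estimated_cost(10, 1.0, RATE),
    # Half the AUs, but the job now runs twice as long.
    "5 AUs, 2 hours": estimated_cost(5, 2.0, RATE),
    # More AUs than the job can use: it still takes 1 hour.
    "20 AUs, 1 hour (10 left idle)": estimated_cost(20, 1.0, RATE),
}

for name, cost in scenarios.items():
    print(f"{name}: {cost:.2f}")
```

In this hypothetical, halving the AUs saves nothing because the job runs twice as long, while allocating more AUs than the job can use doubles the bill without making it any faster.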

- Due to a number of regulations, my company needs its data to be hosted in a data center located in Australia. Is ADL available in an Australian data center?

Currently, it isn’t. If you have a specific interest and an immediate need, please let me know!

- You’ve shown a DLL created using C# code to do cognitive vision. Would it be possible for me to use R instead?

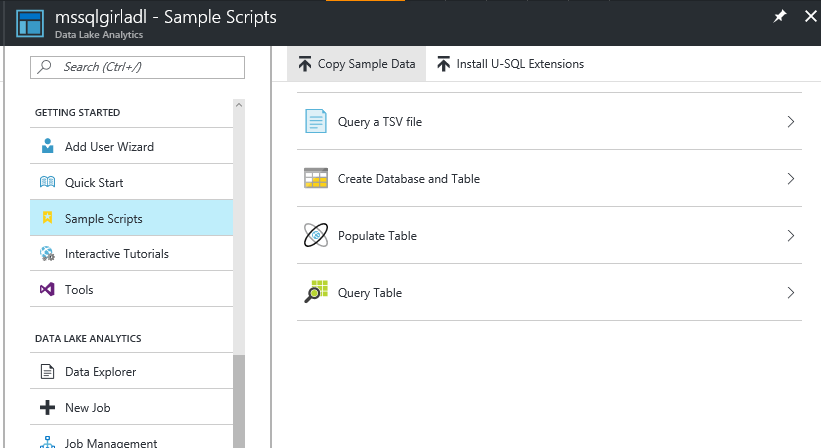

Yes, you can extend U-SQL with R and Python. The Sample Scripts available from the Azure Portal include Getting Started samples that show how to extend U-SQL with R in Azure Data Lake Analytics (ADLA).

You can also go through Erin Saka’s slide deck on using R and U-SQL together.

- Does the DLL that you showed earlier in your demo (from the Getting Started Sample Script) get smarter the more data you throw at it?

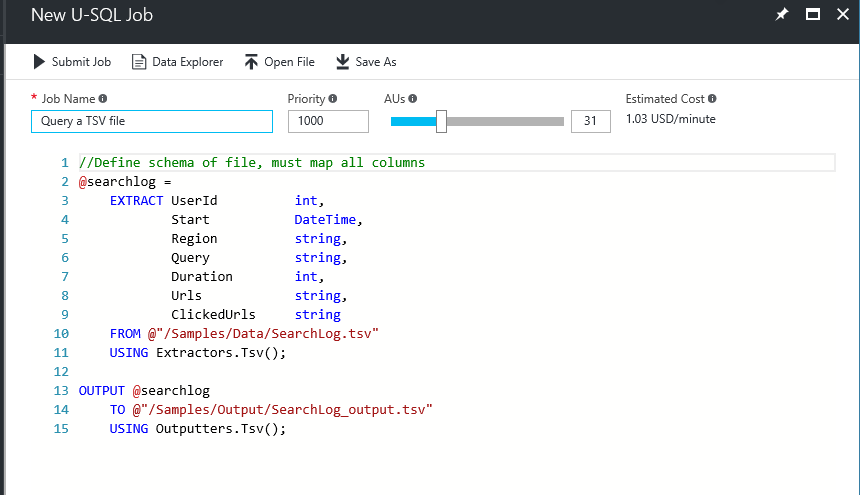

Not automatically. For the demo, you’d have to retrain the model and push the newer DLLs separately. Having said that, it should be possible to operationalize retraining the model and pushing the changes out on a regular cadence.

- You’ve shown “EXTRACT” being used to read files in the demo. ADLA also supports inserting the extracted data into tables. When should you read (extract) data directly from files rather than storing it in a table?

If you need to reuse the data, especially with some filtering, joining and grouping, it’s better to store it in tables than to “extract” it over and over from files. For more details about U-SQL, have a look at Michael Rys’ awesome U-SQL deep dive slide deck presented at PASS Summit 2016.
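To illustrate the trade-off, here is a plain Python analogy. It is not U-SQL and not how ADLA stores tables internally; it only contrasts re-parsing a file for every query with parsing it once and reusing the result. The sample data and the `extract`/`total_for` helpers are hypothetical.

```python
import csv
import io

# Hypothetical sample data standing in for a file in the store.
RAW = "region,amount\nVIC,10\nNSW,20\nVIC,5\n"

parse_count = 0  # how many times the raw text has been parsed


def extract(raw):
    """Parse the raw text on every call (analogous to re-running EXTRACT)."""
    global parse_count
    parse_count += 1
    return list(csv.DictReader(io.StringIO(raw)))


def total_for(rows, region):
    """Filter and aggregate: sum the amounts for one region."""
    return sum(int(r["amount"]) for r in rows if r["region"] == region)


# Approach 1: extract from the file for every query.
parse_count = 0
a = [total_for(extract(RAW), reg) for reg in ("VIC", "NSW")]
extract_each_time = parse_count

# Approach 2: extract once, keep the rows (analogous to a table), reuse them.
parse_count = 0
table = extract(RAW)
b = [total_for(table, reg) for reg in ("VIC", "NSW")]
extract_once = parse_count
```

Both approaches return the same totals; the difference is how many times the raw file is parsed, which grows with every extra query in the extract-each-time approach.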

That’s all for now. Till next time, with more Big Data on ADL 🙂

Julie

@MsSQLGirl keeping the crowd captivated #sqlsatmelbourne pic.twitter.com/Jt7MHKopXp

— Warwick Rudd (@Warwick_Rudd) February 11, 2017
